Note: Beginning in Spring 2024, all course information (including syllabi, assignment descriptions, and supplementary course pages) is delivered via Canvas. For quick reference and for public access, however, we have generated the following export of that content. Some of these links point to content within Canvas; if you are a student in the class, these links should take you to the appropriate in-Canvas content. If you are not a student, these links will not work; however, you can find the same content elsewhere on this page.

Quick links to content within this page:

Syllabus

CS7637: Knowledge-Based AI

This page provides information about the Georgia Tech OMS CS7637 class on Knowledge-Based AI relevant only to the Spring 2024 semester. Note that this page is subject to change at any time. The Spring 2024 semester of the OMS CS7637 class will begin on January 8, 2024. Below, find the course’s calendar, grading criteria, and other information. For more complete information about the course’s requirements and learning objectives, please see the general CS7637 page.

Quick Links

To help with navigation, here are some of the links you’ll be using frequently in this course:

- Tools: Canvas | Peer Feedback

- Class Pages: CS7637 Home | Spring 2024 Syllabus | Recommended Reading List | Course FAQ | Full Course Calendar

- Assignments: Homework 1 | Homework 2 | Homework 3

- Mini-Projects: Mini-Project 1 | Mini-Project 2 | Mini-Project 3 | Mini-Project 4 | Mini-Project 5

- Raven’s Project: Project Overview | Milestone 1 | Milestone 2 | Milestone 3 | Milestone 4 | Final Project

- Exams: About the Exams | Exam 1 | Exam 2

- Participation: Class Participation

Course Calendar At-A-Glance

Below is the calendar for the Spring 2024 OMS CS7637 class. Note that all assignments are due on Sundays at 11:59 PM Anywhere on Earth (AoE) time.
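Anywhere on Earth is the fixed offset UTC-12, so an 11:59 PM AoE deadline on a Sunday falls at 11:59 AM UTC on the following Monday. If you want to know exactly when that is where you live, here is a minimal sketch using only Python's standard library (Python 3.9+ assumed). The helper name `deadline_in_utc` is hypothetical and not part of any course tooling.

```python
from datetime import datetime, timedelta, timezone

# Anywhere on Earth (AoE) is a fixed offset of UTC-12:00 with no daylight saving.
AOE = timezone(timedelta(hours=-12), "AoE")


def deadline_in_utc(year: int, month: int, day: int) -> datetime:
    """Convert an 11:59 PM AoE deadline on the given date to UTC."""
    aoe_deadline = datetime(year, month, day, 23, 59, tzinfo=AOE)
    return aoe_deadline.astimezone(timezone.utc)


# Start-of-Course Survey deadline from the calendar below.
utc_deadline = deadline_in_utc(2024, 1, 14)
print(utc_deadline)               # 2024-01-15 11:59:00+00:00
print(utc_deadline.astimezone())  # the same instant in your computer's local time zone
```

Calling `astimezone()` with no argument converts to the system's local time zone. Because AoE is the last time zone on Earth to reach any given date, the deadline has already passed everywhere else by then.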

| Week # | Week Of | Lessons | Deliverable | Assignment Due Date |
|---|---|---|---|---|
| 1 | 01/08/2024 | 01, 02 | Start-of-Course Survey | 01/14/2024 |
| 2 | 01/15/2024 | 03, 04 | RPM Milestone 1 | 01/21/2024 |
| 3 | 01/22/2024 | 05, 06 | Mini-Project 1 | 01/28/2024 |
| 4 | 01/29/2024 | 07, 08 | Homework 1 | 02/04/2024 |
| 5 | 02/05/2024 | 09 | Mini-Project 2, Quarter-Course Survey | 02/11/2024 |
| 6 | 02/12/2024 | 10, 11 | RPM Milestone 2 | 02/18/2024 |
| 7 | 02/19/2024 | 12 | Exam 1 | 02/25/2024 |
| 8 | 02/26/2024 | 13, 14 | Homework 2 | 03/03/2024 |
| 9 | 03/04/2024 | 15, 16 | Mini-Project 3, Mid-Course Survey | 03/10/2024 |
| 10 | 03/11/2024 | 17, 18 | RPM Milestone 3 | 03/17/2024 |
| 11 | 03/18/2024 | 19, 20 | Mini-Project 4 | 03/24/2024 |
| 12 | 03/25/2024 | 21, 22 | Homework 3 | 03/31/2024 |
| 13 | 04/01/2024 | 23, 24 | Mini-Project 5 | 04/07/2024 |
| 14 | 04/08/2024 | 25 | RPM Milestone 4 | 04/14/2024 |
| 15 | 04/15/2024 | — | Final RPM Project | 04/21/2024 |
| 16 | 04/22/2024 | — | Exam 2 | 04/28/2024 |
| 17 | 04/29/2024 | 26 | End-of-Course Survey, CIOS Survey | 05/05/2024 |

Given above are the numeric labels for each lesson. For reference, here are those lessons’ titles, with the estimated time to complete each lesson in minutes in parentheses:


Course Assessments

Your grade in this class is generally made up of five components: three homework assignments, five mini-projects, one large project, two exams, and class participation. Final grades will be calculated as an average of all individual grade components, weighted according to the percentages below. Students receiving a final average of 90 or above will receive an A; 80 up to (but not including) 90, a B; 70 up to 80, a C; 60 up to 70, a D; and below 60, an F. We do not plan to have a curve. It is intentionally possible for every student in the class to receive an A.
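To see how the weighting works, the sketch below combines component scores using the percentages from the component headings that follow (homework 15%, mini-projects 30%, Raven's milestones 15%, Raven's final project 15%, exams 15%, participation 10%). It assumes every component score is on a 0-100 scale and applies no rounding, because the syllabus does not state a rounding rule. The dictionary keys and function names are illustrative only.

```python
# Component weights in percent, taken from the component headings in this syllabus.
WEIGHTS = {
    "homework": 15,
    "mini_projects": 30,
    "rpm_milestones": 15,
    "rpm_final_project": 15,
    "exams": 15,
    "participation": 10,
}

# Letter-grade cutoffs; an average exactly on a cutoff earns the higher letter.
CUTOFFS = ((90, "A"), (80, "B"), (70, "C"), (60, "D"))


def final_average(scores: dict[str, float]) -> float:
    """Weighted average of component scores, each on a 0-100 scale."""
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / 100


def letter_grade(average: float) -> str:
    """Map a final average to a letter using the syllabus cutoffs (no curve)."""
    for cutoff, letter in CUTOFFS:
        if average >= cutoff:
            return letter
    return "F"


example = {
    "homework": 92,
    "mini_projects": 88,
    "rpm_milestones": 100,
    "rpm_final_project": 84,
    "exams": 80,
    "participation": 100,
}
average = final_average(example)
print(average, letter_grade(average))  # 89.8 B
```

Because the mini-projects carry twice the weight of any other single component, a few points there move the final average more than the same few points on the exams.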

Homework (15%)

You will complete three homework assignments in this course (Homework 1, Homework 2, and Homework 3), each worth 5% of your average. Each homework assignment will have one question, which you will answer in a maximum of 5 pages. These questions will cover the course material and explore your understanding of key concepts from the lectures. All assignments should be written using JDF.

Mini-Projects (30%)

You will complete five mini-projects in this course (Mini-Projects 1, 2, 3, 4, and 5), each worth 6% of your average. Each mini-project asks you to implement some of the AI logic shown in the course lectures, although you are also welcome to attempt to solve the problems using other techniques. For each mini-project, you will also provide a short write-up of your approach, mainly so that you can share it with classmates and look through their approaches. These write-ups should be written using JDF. You’ll submit the write-ups to Canvas and the code to Gradescope. Note that your write-up grade and your performance grade will be posted to separate categories on Canvas; each category will show as worth 15%, adding up to 30% for the mini-projects as a whole.

Raven’s Project Milestones (15%) and Raven’s Final Project (15%)

The semester-long project is the Raven’s project, where you will write an agent that can solve problems on the Raven’s Progressive Matrices test. For the project, you will complete four milestones (1, 2, 3, and 4) throughout the semester, and then a final submission. The four milestones together are worth 15% of your average, and the final submission is worth another 15%. The milestones are there to ensure that you get started on the project early and have an opportunity to see your classmates’ approaches. Each milestone, as well as the final project submission, is graded half on performance and half on a written report. These write-ups should be written using JDF. You’ll submit the write-ups to Canvas and the code to Gradescope. Note that your write-up grade and your performance grade will be posted to separate categories on Canvas; each of the four categories (milestone performance, milestone journals, project performance, and project journals) will show as worth 7.5%, adding up to 30% as a whole.
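The arithmetic behind these four Canvas categories, along with the per-milestone share, can be checked with exact fractions. This is a minimal sketch; the variable names are illustrative only.

```python
from fractions import Fraction

MILESTONES_TOTAL = Fraction(15)     # percent of the course grade for all four milestones
FINAL_PROJECT_TOTAL = Fraction(15)  # percent of the course grade for the final project

# Every Raven's deliverable is graded half on performance and half on the written report.
canvas_categories = {
    "milestone performance": MILESTONES_TOTAL / 2,
    "milestone journals": MILESTONES_TOTAL / 2,
    "project performance": FINAL_PROJECT_TOTAL / 2,
    "project journals": FINAL_PROJECT_TOTAL / 2,
}

# The milestone total is split evenly across the four milestones.
per_milestone = MILESTONES_TOTAL / 4

for name, weight in canvas_categories.items():
    print(f"{name}: {float(weight)}%")               # each category: 7.5%
print(f"total: {float(sum(canvas_categories.values()))}%")  # total: 30.0%
print(f"each milestone: {float(per_milestone)}%")        # each milestone: 3.75%
```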

Exams (15%)

You will take two proctored exams in this class—Exam 1 and Exam 2—each worth 7.5% of your average. Each exam is 90 minutes long with up to 25 questions, all multiple-choice, multiple-correct with five choices and between 1 and 4 correct answers. Partial credit is awarded. Each exam will cover all lectures through the current week (for example, Exam 1 covers lessons 01 through 12). All exams are open-book, open-note, open-internet: everything except live interaction with another person. The tests are digitally proctored. Tests will open at least one week prior to the deadline, though they may be open earlier. For more information, check out the About the Exams page.

Class Participation (10%)

One of the major strengths of large online classes is the way they allow students to have a significant impact on their classmates’ experiences. As such, 10% of your class grade and 10% of the time you spend on this class will be devoted to improving the course experience for other students. This is class participation credit, and it can be earned in various ways: participating on the class forum; participating in peer review; submitting annotated bibliographies for the course resources; submitting candidate exam questions; participating in other activities; completing course surveys; completing the secret survey by clicking the hidden link here before the end of week 2 to indicate you read the entire syllabus; and more. Other mechanisms to earn participation points may be announced throughout the semester; check the course forum for those!

Course Policies

The following policies are binding for this course.

Official Course Communication

You are responsible for knowing the following information:

- Anything posted to this syllabus (including the pages linked from here, such as the general course landing page).

- Anything emailed directly to you by the teaching team (including announcements via the course forum or Canvas), 24 hours after receiving such an email.

Generally speaking, we will post announcements via Canvas and cross-post their content to the course forum; you should thus ensure that your Canvas settings are such that you receive these announcements promptly, ideally via email (in addition to other mechanisms if you’d like). Georgia Tech generally recommends that students check their Georgia Tech email once every 24 hours. So, if an announcement or message is time-sensitive, you will not be responsible for its contents until 24 hours after it has been sent.

Note that this means you won’t be responsible for knowing information communicated through several other channels we’ll be using. You aren’t responsible for knowing anything posted to the course forum that isn’t linked from an official announcement. You aren’t responsible for anything said in Slack or other third-party sites we may sometimes use to communicate with students. You don’t need to worry about missing critical information as long as you keep up with your email and understand the documents on this website. This also applies in reverse: we do not monitor Canvas inbox messages, and we may not respond to direct emails. If you need to get in touch with the course staff, please post privately to the course forum (either to all instructors or to an instructor individually).

Communicating with Instructors and TAs

Communication with the course teaching team should be handled via the discussion forum. If your question is relevant to the entire class, you should ask it publicly; if your question is specific to you, such as a question about your specific grade or submission, you should ask it privately.

Our workflow is to regularly filter the forum for Unresolved posts, which include top-level threads with no answer accepted by the original poster, as well as mega-threads with unresolved follow-ups. If your question requires an official answer or follow-up from an instructor or teaching assistant, make sure that it is posted either as a Question or as a follow-up to a mega-thread, and that it is marked Unresolved. Once an instructor or TA has answered your question, it will automatically be marked as Resolved; if you require further assistance, you are welcome to add a follow-up, but be sure to unmark the question as Resolved so that a member of the teaching team sees it.

Similarly, to keep the forum organized, please post as a Post or Note instead of a Question if your question does not require an official response from the teaching team. For example, if you are interested in getting multiple perspectives from classmates, getting feedback on your ideas, or having a discussion that does not have a single answer, please use a Post or Note instead of a Question. Please reserve Question threads for questions that will likely have a single official response. TAs and instructors will regularly convert Questions that do not need a single official answer into Posts or Notes, but categorizing your post correctly in the first place saves them time and lets them focus their attention on other students.

Late Work

Running such a large class involves a detailed workflow for distributing assignments to graders, grading those assignments, and returning those grades. As such, work that does not enter into that workflow causes major delays. We have taken steps to minimize the need to ever submit work late: we have made the descriptions of all assignments available on the first day of class so that if there are expected interruptions (such as weddings, business trips, and conferences), you can complete the work ahead of time. If you have technical difficulties submitting an assignment to Canvas by the deadline, post privately to the course forum immediately and attach your submission. Then, submit it to Canvas as soon as you can thereafter.

If due to a personal emergency, health emergency, family emergency, or other unforeseeable life event you find you are unable to complete an assignment on time, please post privately to the course forum with information regarding the emergency. Depending on your unique situation, we will share guidance on how to proceed; if the emergency is projected to delay a significant quantity of the work required for the class, we may recommend withdrawing and reattempting the class at a later date. If the emergency will likely only impact a small amount of the course, we may be able to accept the work late as a one-time exception. If the emergency takes place once you have already completed a significant fraction of the coursework, we may offer an Incomplete grade to allow you to finish the class after the semester is over.

Note that depending on the nature and significance of the request, we may require documentation from the Dean of Students office that the emergency is sufficient to justify offering an Incomplete grade or accepting late work. Note also that, regardless of the reason, we cannot promise any particular turnaround time for grading work that was approved to be submitted late; grades and feedback may not be returned before the end of the term, and a temporary grade of Incomplete may need to be entered to leave time to grade work that was accepted late.

If you are not comfortable sharing with us the nature of an emergency, or if you need more comprehensive advocacy, we ask you to go through the Dean of Students’ office regarding class absences. The Dean of Students is equipped to address emergencies that we lack the resources to address. Additionally, the Dean of Students office can coordinate with you and alert all your classes together instead of requiring you to contact each professor individually. The Dean of Students is there to be an advocate and partner for you when you’re in a crisis; we wholeheartedly recommend taking advantage of this resource if you are in need. You may find information on contacting the Dean of Students with regard to personal emergencies here: https://studentlife.gatech.edu/request-assistance

Academic Honesty

All students in the class are expected to know and abide by the Georgia Tech Academic Honor Code. Specifically for us, the following academic honesty policies are binding for this class:

First, for essays, journals, and reports:

- In written essays, all sources are expected to be cited according to APA style. When directly quoting another source, in-line quotation marks, an in-line citation, and a reference at the end of the document are all required. When summarizing another source in your own words, quotation marks are not needed, but an in-line citation and a reference at the end of your document are still required. You should consult the Purdue OWL Research and Citation Resources for proper citation practices, especially the following pages: Quoting, Paraphrasing, and Summarizing; Paraphrasing; Avoiding Plagiarism Overview; Is It Plagiarism?; and Safe Practices. You should also consult our dedicated pages (from another course) on how to use citations and how to avoid plagiarism.

- Any non-original figures must similarly be cited. If you borrow an existing figure and modify it, you must still cite the original figure. It must be obvious what portion of your submission is your own creation.

- In written essays, you may not copy any content from any current or previous student in this class, regardless of whether you cite it or not.

- There is one exception to these policies: unless you are quoting the course videos directly, you are not required to cite content borrowed from the course itself (such as figures or topics from the videos). The assumption is that the reader knows what you write is based on your participation in this class, so references to course material are not interpreted as claiming credit for the course content itself.

Second, for code on course projects:

- You may not under any circumstances copy any code from any current or former student in the class, or from any public project addressing the same content as the course projects, such as the Raven’s Progressive Matrices or a Block World agent.

- The only code segments you are permitted to borrow are isolated project-agnostic functions, meaning functions that serve a purpose that makes sense outside the context of our projects (for example, inverting colors in an image). Include a link to the original source of the code and clearly note where the copied code begins and ends (for example, with /* BEGIN CODE FROM (source link) */ before and /* END CODE FROM (source link) */ after the copied code). This is partly to emphasize what your unique project and deliverable is, and partly to protect against instances where you and a classmate both borrowed a function from the same external repository. Note that annotating and attributing code is far easier than asking a TA whether you need to attribute it: if you need to ask, attribute it.
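The attribution example in the policy uses C-style /* */ comments. Because the project framework is written in Python, the equivalent markers would presumably be # comments, as in the sketch below. Here `invert_grayscale` is a hypothetical stand-in for a borrowed, project-agnostic helper (it is not taken from any real source), and "(source link)" is a placeholder you would replace with the actual URL.

```python
# BEGIN CODE FROM (source link)
def invert_grayscale(pixels: list[list[int]]) -> list[list[int]]:
    """Invert an 8-bit grayscale image stored as a list of pixel rows."""
    return [[255 - value for value in row] for row in pixels]
# END CODE FROM (source link)


# Your own project-specific code continues outside the markers.
inverted = invert_grayscale([[0, 128, 255]])
print(inverted)  # [[255, 127, 0]]
```

Keeping the markers tight around the borrowed function makes it obvious which code is yours, which is the point of the policy.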

Third, for proctored assessments:

- During all proctored assessments, you are prohibited from interacting directly with any other person on the topic of the exam material. This includes posting on forums, sending emails or text messages, talking in person or on the phone, or any other mechanism that would allow you to receive live input from another person.

- During all proctored assessments, you may only use the device on which you are completing the assessment; you may not use other devices, even during open-book, open-note assessments as it is not possible to know whether secondary devices are being used to consult resources or to interact with others. This means that the result of using any keyboard and mouse should be observable in the session recording.

- Finally, you may not take any content contained on proctored assessments out of the proctored assessment, such as writing down exam questions, taking screenshots, or sharing information with classmates. Any attempt to retain a copy of exam content, or to obtain or consult exam content retained by someone else, will be treated as academic misconduct.

These policies, including the rules on all pages linked in this section, are binding for the class. Any violations of this policy will be subject to the institute’s Academic Integrity procedures, which may include a 0 grade on assignments found to contain violations; additional grade penalties; and academic probation or dismissal.

Finally, note that you may not post the work that you submit for this class publicly, either during or after the semester. We understand that the work you submit for this class may be valuable for job opportunities, personal web sites, etc.; you are welcome to write about what you did for this class, and to provide the actual work privately when requested, but we ask that you do not make your actual submissions or code publicly available; this is to reduce the likelihood of future students plagiarizing your work. Similarly, unless you notify us otherwise, by participating in this class you authorize us to pursue the removal of your content if it is discovered on any public assignment repositories, especially if it is clearly contributed there by someone else.

Note that if you are accused of academic misconduct, you are not permitted to withdraw from the class until the accusation is resolved; if you are found to have participated in misconduct, you will not be allowed to withdraw for the duration of the semester. If you do so anyway, you will be forcibly re-enrolled without any opportunity to make up work you may have missed while illegally withdrawn.

AI Collaboration Policy

Recent advancements in artificial intelligence—Copilot, ChatGPT, etc.—can be great resources for improving your learning in the course, but it is important to ensure that their benefits are targeted at your learning rather than solely at your deliverables. Toward that end, the same academic integrity policy above applies to AI assistance: you are welcome to consult with AI agents just as you would consult with classmates, discuss ideas with friends, and seek feedback from colleagues. However, just as you would not hand your device to someone else to directly fix or improve your classwork, so also you may not copy anything directly from an AI agent into your document, nor let an AI agent directly generate content for your submission. This rule means you should disable any AI assistance more advanced than a grammar checker inside your word processors and IDEs.

Although you are prohibited from having these tools directly integrated into your workspace or from copying content from these assistants directly into your work, you are nonetheless permitted to use them more generally. The important consideration is to ensure that you are using the AI agent as a learning assistant rather than as a homework assistant: so long as your submission solely reflects your own understanding of the content, you are encouraged to let AI assistants aid in developing your understanding.

Feedback

Every semester, we make changes and tweaks to the course formula. As a result, every semester we try some new things, and some of these things may not work. We ask for your patience and support as we figure things out, and in return, we promise that we, too, will be fair and understanding, especially with anything that might impact your grade or performance in the class. We also want to consistently get feedback on how we can improve and expand the course for future iterations. You can take advantage of the feedback box on the course forum (especially if you want to gather input from others in the class), give us feedback on the surveys, or contact us directly via private messages on the course forum.

For other questions, please first check out the Course FAQ.

Course Calendar

This class has a lot of moving parts: lectures, homeworks, projects, tests, surveys, peer review, participation, and more. It can be a lot to track, so this calendar provides a canonical list of everything you need to do on a weekly basis. If you check off all these tasks and deliverables, you’ve completed the coursework. Numbers in parentheses give the approximate amount of time we expect each task to require (in hours).

Note that we differentiate work on RPM Milestones from work on the project more generally; even after finishing Milestone 2, for instance, you will likely spend time further improving your agent’s performance on the problems covered by Milestone 2 in order to improve for the final project submission at the end of the term.

| Week | Tasks | Deliverables | Deadline |
|---|---|---|---|
| 1 | | | 01/14/2024 |
| 2 | | | 01/21/2024 |
| 3 | | | 01/28/2024 |
| 4 | | | 02/04/2024 |
| 5 | | | 02/11/2024 |
| 6 | | | 02/18/2024 |
| 7 | | | 02/25/2024 |
| 8 | | | 03/03/2024 |
| 9 | | | 03/10/2024 |
| 10 | | | 03/17/2024 |
| 11 | | | 03/24/2024 |
| 12 | | | 03/31/2024 |
| 13 | | | 04/07/2024 |
| 14 | | | 04/14/2024 |
| 15 | | | 04/21/2024 |
| 16 | | | 04/28/2024 |
| 17 | | | 05/05/2024 |

RPM Project Overview

Our semester-long class project involves constructing an AI agent to address a human intelligence test. The project is due at the end of the semester, but there are a number of required milestones to pass along the way. These exist (a) to ensure that you get an early enough start to have a chance at success and (b) to give you opportunities to see your classmates’ approaches and possibly incorporate their ideas into your own project.

This page covers the project as a whole, emphasizing what your end-goal is for the end of the semester.

In a (Large) Nutshell

The CS7637 class project is to create an AI agent that can pass a human intelligence test. You’ll download a code package that contains the boilerplate necessary to run an agent you design against a set of problems inspired by the Raven’s Progressive Matrices test of intelligence. Within it, you’ll implement the Agent.py file to take in a problem and return an answer.

There are four sets of problems for your agent to answer: B, C, D, and E. Each set contains four types of problems: Basic, Test, Challenge, and Raven’s. You’ll be able to see the Basic and Challenge problems while designing your agent, and your grade will be based on your agent’s answers to the Basic and Test problems. The milestones throughout the semester will carry you through tackling more and more advanced problems: for Milestone 1, you’ll just familiarize yourself with the submission process and data structures. For Milestone 2, you’ll target the first set of problems, the relatively easy 2x2 problems from Set B. For Milestone 3, you’ll move on to the second set of problems, the more challenging 3x3 problems from Set C. For Milestone 4, you’ll look at the more difficult Set D and Set E problems, building toward the final deliverable a bit later.

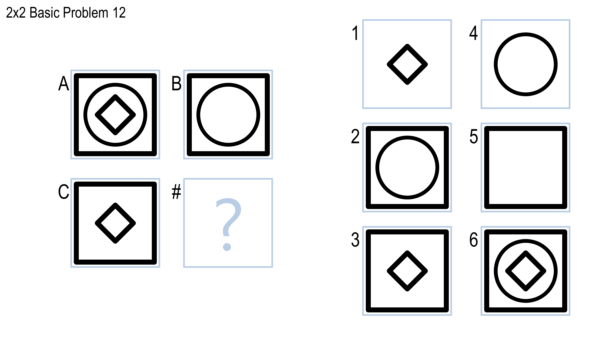

For all problems, your agent will be given images that represent the problem in .png format. An example of a full problem is shown above; your agent would be given separate files representing the contents of squares A, B, C, 1, 2, 3, 4, 5, and 6.
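As a rough illustration of which figures your agent receives, the sketch below lists the labels for each problem type. The 2x2 labels come directly from the description above. The 3x3 labels are an assumption based on the real test's 3x3 sets, which show eight matrix cells and offer eight answer choices; check the code package for the exact labels it uses. The function name is hypothetical.

```python
def figure_labels(problem_type: str) -> tuple[list[str], list[str]]:
    """Return (matrix cell labels, answer choice labels) for a problem type."""
    if problem_type == "2x2":
        # Cells A, B, C plus answer choices 1-6, as described in this section.
        return list("ABC"), [str(n) for n in range(1, 7)]
    if problem_type == "3x3":
        # Assumption: cells A-H plus answer choices 1-8, as on the real test's 3x3 sets.
        return list("ABCDEFGH"), [str(n) for n in range(1, 9)]
    raise ValueError(f"unknown problem type: {problem_type!r}")


cells, answers = figure_labels("2x2")
print(cells + answers)  # ['A', 'B', 'C', '1', '2', '3', '4', '5', '6']
```

Each label corresponds to one .png image. The agent's job is to decide which answer label best completes the matrix.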

Don’t worry if the above doesn’t make sense quite yet — the projects are a bit complex when you’re getting started. The goal of this section is just to provide you with a high-level view so that the rest of this document makes a bit more sense.

Background and Goals

This section covers the learning goals and background information necessary to understand the projects.

Learning Goals

One goal of Knowledge-Based Artificial Intelligence is to create human-like, human-level intelligence, and to use that to reflect on how humans actually think. If this is the goal of the field, then what better way to evaluate the intelligence of an agent than by having it take the same intelligence tests that humans take?

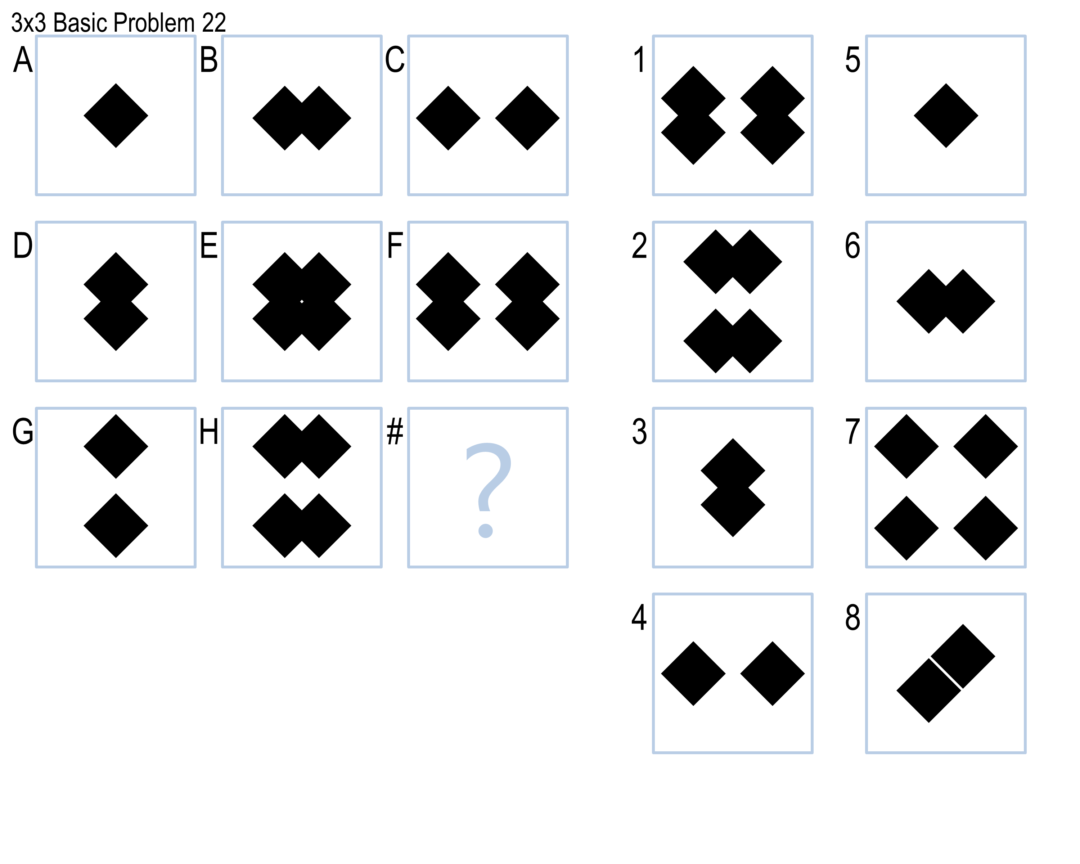

There are numerous tests of human intelligence, but one of the most reliable and commonly used is Raven’s Progressive Matrices. Raven’s Progressive Matrices, or RPM, are visual analogy problems where the test-taker is given a matrix of figures and asked to select the figure that completes the matrix. An example of a 2x2 problem was shown earlier; the image directly above shows an example of a 3x3 problem.

In these projects, you will design agents that will address RPM-inspired problems such as the ones above. The goal of this project is to authentically experience the overall goals of knowledge-based AI: to design an agent with human-like, human-level intelligence; to test that agent against a set of authentic problems; and to use that agent’s performance to reflect on what we believe about human cognition. As such, you might not use every topic covered in KBAI on the projects; the topics covered give a bottom-up view of the topics and principles of KBAI, while the project gives a top-down view of the goals and concepts of KBAI.

About the Test

The full Raven’s Progressive Matrices test consists of 60 visual analogy problems divided into five sets: A, B, C, D, and E. Set A consists of 12 simple pattern-matching problems, which we won’t cover in these projects. Set B consists of 12 2x2 matrix problems, such as the first image shown above. Sets C, D, and E each consist of 12 3x3 matrix problems, such as the second image shown above. Problems are named with their set followed by their number, such as problem B-05 or C-11. The sets are of roughly ascending difficulty.

For copyright reasons, we cannot provide the real Raven’s Progressive Matrices test to everyone. Instead, we’ll be giving you sets of problems — which we call “Basic” problems — inspired by the real RPM to use to develop your agent. Your agent will be evaluated based on how well it performs on these “Basic” problems, as well as a parallel set of “Test” problems that you will not see while designing your agent. These Test problems are directly analogous to the Basic problems; running against the two sets provides a check for generality and overfitting. Your agents will also run against the real RPM as well as a set of Challenge problems, but neither of these will be factored into your grade.

Overall, by the end of the semester, your agent will answer 192 problems. More details on the specific problems your agent will complete are given in the sections that follow.

Each problem set (that is, Set B, Set C, Set D, and Set E) consists of 48 problems: 12 Basic, 12 Test, 12 Raven’s, and 12 Challenge. Only Basic and Test problems will be used in determining your grade. The Raven’s problems are run for authenticity and analysis, but are not used in calculating your grade.
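The problem counts can be checked by enumeration: 4 sets × 4 problem types × 12 problems gives 192 problems, of which the Basic and Test problems (96) count toward your grade. This sketch names problems with their set letter and a two-digit number, as in B-05. The exact names the code package uses for files and folders are not specified here and may differ.

```python
from itertools import product

SETS = "BCDE"
PROBLEM_TYPES = ("Basic", "Test", "Challenge", "Raven's")
GRADED_TYPES = {"Basic", "Test"}

# One (problem type, name) pair per problem; names follow the "B-05" pattern.
problems = [
    (problem_type, f"{problem_set}-{number:02d}")
    for problem_set, problem_type, number in product(SETS, PROBLEM_TYPES, range(1, 13))
]
graded = [p for p in problems if p[0] in GRADED_TYPES]

print(len(problems))  # 192
print(len(graded))    # 96
print(problems[4])    # ('Basic', 'B-05')
```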

In designing your agent, you will have access to the Basic and Challenge problems; you may run your agent locally to check its performance on these problems. You will not have access to the Test or Raven’s problems while designing and testing your agent: when you upload your agent to Gradescope, you will see how well it performs on those problems, but you will not see the details of the problems themselves. Challenge and Raven’s problems are not part of your grade.

Note that the Challenge problems will often be used to expose your agent to extra properties and shapes seen on the real Raven’s problems that are not covered in the Basic and Test problems. The problems themselves generally ascend in difficulty from set to set (although many students find Set E a bit easier than Set D).

Details & Deliverables

This section covers the more specific details of the four project milestones, as well as the final project you will submit.

Project Milestones

Your ultimate goal is to submit a final project that attempts all 192 problems. However, to help ensure that you start early and to give you an opportunity to see and learn from your classmates’ approaches, there are four intermediate milestones. On each of these milestones, your agent will only run against a subset of the full set of problems to allow you to test more efficiently. You will also write a brief report on your current approach for each milestone; the primary purpose of these reports will be to help you get feedback from classmates and see their approaches. For each milestone, you will be graded on a combination of your agent’s performance and the report that you write; the bars for performance on the milestones are relatively low, however, as the goal is to ensure that you are getting started early.

Each milestone has its own page. In brief, however:

- Milestone 1: Set B, Basic Problems only. The goal of this milestone is simply to ensure you’ve set up your local project infrastructure and familiarized yourself with Gradescope. You will receive 100% of your performance credit as long as your agent answers any problem correctly. Your report will focus on early ideas you have for approaching the project.

- Milestone 2: Set B, all problems. The goal of this milestone is to ensure you have started on the early, easier problems early in the semester. As long as your agent can answer 5 (out of 12) Basic B and 5 (out of 12) Test B problems correctly, you will receive full performance credit.

- Milestone 3: Set C. The goal of this milestone is to ensure you have generalized your approach out to the more difficult 3x3 problems by an appropriate time of the semester. As long as your agent can answer 5 (out of 12) Basic C and 5 (out of 12) Test C problems correctly, you will receive full performance credit.

- Milestone 4: Sets D and E. The goal of this milestone is to ensure you have looked at all four sets before the final project deadline, so that you may spend the last portion of the semester refining, improving, and writing your final report. As long as your agent can correctly answer 10 (out of 24) Basic D & E and 10 (out of 24) Test D & E problems, you will receive full performance credit.
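The full-credit bars above can be expressed as a small table-driven check, which is handy when reading your Gradescope results. This sketch only reports whether the full-credit bar is met, because the syllabus does not say how performance credit is computed below the thresholds. The input format (a count of correct answers keyed by problem type and set letter) and the function name are hypothetical. Note that Milestone 4 pools Sets D and E.

```python
# Full performance-credit thresholds from the milestone descriptions:
# milestone -> (problem sets covered, minimum correct per problem type).
THRESHOLDS = {
    1: (("B",), {"Basic": 1}),
    2: (("B",), {"Basic": 5, "Test": 5}),
    3: (("C",), {"Basic": 5, "Test": 5}),
    4: (("D", "E"), {"Basic": 10, "Test": 10}),
}


def earns_full_performance_credit(milestone: int, correct: dict[tuple[str, str], int]) -> bool:
    """correct maps (problem type, set letter) to the number answered correctly."""
    sets, minimums = THRESHOLDS[milestone]
    return all(
        sum(correct.get((problem_type, s), 0) for s in sets) >= minimum
        for problem_type, minimum in minimums.items()
    )


results = {("Basic", "D"): 6, ("Basic", "E"): 4, ("Test", "D"): 7, ("Test", "E"): 3}
print(earns_full_performance_credit(4, results))  # True: 10 Basic and 10 Test across D and E
```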

For each milestone, your code must be submitted to the autograder by the deadline. However, it is okay if your project is still running after the deadline. Note that Gradescope by default counts your last submission for a grade; if you want to count an earlier submission, you must activate that earlier submission.

On each milestone, your grade will be 50% meeting the performance expectations and 50% the report you write up. You will submit your agent to Gradescope and your report to Canvas as a PDF. The four milestones together are 15% of your course grade; each is thus 3.75% of your course grade.
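As a worked check of these weights, the sketch below converts a milestone's two halves into course-grade percentage points. The function name and its fractional inputs are illustrative choices, not part of any course tool.

```python
# Weights from the text above: four milestones share 15% of the course grade,
# and each milestone is split evenly between performance and the report.
MILESTONES_WEIGHT = 15.0  # percent of the course grade
NUM_MILESTONES = 4
PERFORMANCE_SHARE = 0.5
REPORT_SHARE = 0.5


def milestone_course_points(performance_fraction, report_fraction):
    """Course-grade percentage points earned from one milestone.

    Both arguments are fractions in [0, 1] of the credit available for that half.
    """
    per_milestone = MILESTONES_WEIGHT / NUM_MILESTONES  # 3.75 points each
    score = PERFORMANCE_SHARE * performance_fraction + REPORT_SHARE * report_fraction
    return per_milestone * score
```

For example, full performance credit and 80% on the report gives 3.75 × (0.5 × 1.0 + 0.5 × 0.8) = 3.375 points of the course grade.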

Final Project

The final project will run against all 192 problems. You can submit to the final project throughout the semester to see how your agent is doing so far, but make sure to submit to the Milestone assignments as well.

More information about the final project is available on the final project page. For the final project, you will write a longer, more formal, and more complete report on your project. Your score will be based on raw performance on the Basic and Test problems. Like the milestones, performance will be 50% of your grade and your report will be 50% of your grade. Your final project is 15% of your course grade.

Getting Started

To make it easier to start the project and focus on the concepts involved (rather than the nuts and bolts of reading in problems and writing out answers), you’ll be working from an agent framework in Python.

- Download RPM-Project-Code as a zip file.

You will place your code into the Solve method of the Agent class supplied. You can also create any additional methods, classes, and files needed to organize your code; Solve is simply the entry point into your agent.

The Problem Sets

As mentioned previously, by the final project, your agent will run against 192 problems: 4 Sets of 48 problems. Each of the 4 Sets is broken down into four subsets of 12: 12 Basic, 12 Test, 12 Raven’s, and 12 Challenge.

You can see the Basic and Challenge problems and test your agent’s performance on them locally. You cannot see the Test and Raven’s problems, and your agent’s performance will only be tested when you submit to Gradescope. Your grade will be based solely on the Basic and Test problems.

The Raven’s problems are used so that you can see how your agent is performing on the real Raven’s test. The Challenge problems are primarily there to expose your agent to certain details that are present in the Raven’s problems but not in the Basic problems (such as shapes shaded with diagonal lines).

Within each set, the Basic, Test, and Raven’s problems are constructed to be roughly analogous to one another. The Basic problem is constructed to mimic the relationships and transformations in the corresponding Raven’s problem, and the Test problem is constructed to mimic the Basic problem very, very closely. So, if you see that your agent gets Basic problem B-05 correct but Test and Raven’s problems B-05 wrong, you know that might be a place where your agent is either overfitting or getting lucky. This also means you can anticipate your agent’s performance on the Test problems relatively well: each Test problem uses a near-identical principle to the corresponding Basic problem. In the past, agents have averaged getting 85% as many Test problems right as Basic problems, so there’s a pretty good correlation there if you’re using a robust, general method.
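One way to act on this pairing is to line up Basic and Test results by problem number and flag problems your agent solves in Basic but misses in the matching Test problem. This sketch assumes you have transcribed per-problem Pass/Fail results into two dictionaries keyed by problem number (Test results come only from the Gradescope output, since Test problems cannot be run locally); the helper name and data shape are hypothetical.

```python
def compare_basic_and_test(basic, test):
    """Compare per-problem correctness on paired Basic and Test problems.

    basic and test map problem numbers such as "B-05" to True (correct) or False.
    Returns (test_to_basic_ratio, flagged), where flagged lists problems solved
    in Basic but missed in the paired Test problem, a possible sign of
    overfitting or luck.
    """
    basic_correct = sum(basic.values())
    test_correct = sum(test.values())
    ratio = test_correct / basic_correct if basic_correct else 0.0
    flagged = sorted(
        number for number, solved in basic.items()
        if solved and not test.get(number, False)
    )
    return ratio, flagged


basic = {"B-01": True, "B-02": True, "B-03": False, "B-05": True}
test = {"B-01": True, "B-02": False, "B-03": False, "B-05": False}
ratio, flagged = compare_basic_and_test(basic, test)
print(flagged)  # ['B-02', 'B-05']
```

A ratio far below the historical average of roughly 0.85 may hint that some of your Basic successes rely on problem-specific details rather than a general method; the flagged problems are the natural place to look first.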

The Problems

You are provided with the Basic and Challenge problems to use in designing your agent. The Test and Raven’s problems are hidden and will only be used when grading your project. This is to test your agents for generality: it isn’t hard to design an agent that can answer questions it has already seen, just as it would not be hard to score well on a test you have already taken before. However, performing well on problems you and your agent haven’t seen before is a more reliable test of intelligence. Your grade is based solely on your agent’s performance on the Basic and Test problems.

All problems are contained within the Problems folder of the downloadable. Problems are divided into sets, and then into individual problems. Each problem’s folder has four types of things:

- The problem itself, for your benefit.

- A ProblemData.txt file containing information about the problem, including its type. It also contains two booleans for internal use: one indicating whether the problem has visual representations (this will always be true) and one indicating whether it has descriptive data (not in use at this time).

- A ProblemAnswer.txt file containing the answer to the problem.

- Visual representations of each figure, named A.png, B.png, etc.

You should not attempt to access ProblemData.txt or ProblemAnswer.txt directly; their filenames will be changed when we grade projects. Generally, you need not worry about this directory structure; all problem data will be loaded into the RavensProblem object passed to your agent’s Solve method, and the filenames for the different visual representations will be included in their corresponding RavensFigures.

Working with the Code

The framework code is available under Getting Started above. You may modify ProblemSetList.txt to alter what problem sets your code runs against locally; this will be useful early in the term when you probably do not need to bother thinking about later problem sets yet. This will not affect what it runs against on Gradescope.

The Code

The downloadable package has a number of Python files: RavensProject, ProblemSet, RavensProblem, RavensFigure, and Agent. Of these, Agent is the only class you should modify for your submission. You may change the other classes locally to test your agent, add debug statements, etc.; however, when we test your code, we will use the original versions of these files as downloaded here, so do not rely on changes to any class except Agent for your code to run. In addition to Agent, you may also write your own additional files and classes for inclusion in your project.

In Agent, you will find two methods: a constructor and a Solve method. The constructor will be called at the beginning of the program, so you may use this method to initialize any information necessary before your agent begins solving problems. After that, Solve will be called on each problem. You should write the Solve method to return its answer to the given question:

- 2x2 questions have six answer options, so to answer the question, your agent should return an integer from 1 to 6.

- 3x3 questions have eight answer options, so your agent should return an integer from 1 to 8.

- If your agent wants to skip a question, it should return a negative number. Any negative number will be treated as your agent skipping the problem.

You may do all the processing within Solve, or you may write other methods and classes to help your agent solve the problems.
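Here is a minimal sketch of the Agent class described above. It assumes the framework calls Solve(problem) and that RavensProblem exposes its type as a problemType attribute holding "2x2" or "3x3"; check both names against the downloaded files. choose_answer is a hypothetical placeholder for your own reasoning. The guard in Solve ensures that a bug in that reasoning produces a skip rather than an invalid answer.

```python
class Agent:
    def __init__(self):
        # Runs once before any problem is solved: load shared resources here.
        self.problems_seen = 0

    def Solve(self, problem):
        """Return an answer for one RavensProblem (assumes problem.problemType)."""
        self.problems_seen += 1
        num_options = 6 if problem.problemType == "2x2" else 8
        answer = self.choose_answer(problem, num_options)
        # Out-of-range answers become -1, which the framework treats as a skip.
        if not isinstance(answer, int) or not 1 <= answer <= num_options:
            return -1
        return answer

    def choose_answer(self, problem, num_options):
        # Hypothetical placeholder for your reasoning; always guesses option 1.
        return 1
```

A placeholder like this is the kind of hard-coded answer Milestone 1 permits, though it only earns credit if option 1 happens to be correct on at least one problem.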

When running, the program will load questions from the Problems folder. It will then ask your agent to solve each problem one by one and write the results to ProblemResults.csv. You may check ProblemResults.csv to see how well your agent performed. You may also check SetResults.csv to view a summary of your agent’s performance at the set level.

The Documentation

- RavensProject: The main driver of the project. This file will load the list of problem sets, initialize your agent, then pass the problems to your agent one by one.

- RavensGrader: The grading file for the project. After your agent generates its answers, this file will check the answers and assign a score.

- Agent: The class in which you will define your agent. When you run the project, your Agent will be constructed, and then its Solve method will be called on each RavensProblem. At the end of Solve, your agent should return an integer as the answer for that problem (or a negative number to skip that problem).

- ProblemSet: A list of RavensProblems within a particular set.

- RavensProblem: A single problem, such as the one shown earlier in this document. A RavensProblem includes:

- A Dictionary of the individual Figures (that is, the squares labeled “A”, “B”, “C”, “1”, “2”, etc.) from the problem. In a 2x2 problem, the RavensFigures associated with keys “A”, “B”, and “C” are the problem itself, and those associated with keys “1” through “6” are the potential answer choices; 3x3 problems follow the same pattern with more lettered frames and answer choices “1” through “8”.

- A String representing the name of the problem and a String representing the type of problem (“2x2” or “3x3”).

- RavensFigure: A single square from the problem, labeled either “A”, “B”, “C”, “1”, “2”, etc., containing a filename referring to the visual representation (in PNG form) of the figure’s contents.

The documentation is ultimately somewhat straightforward, but it can be complicated when you’re initially getting used to it. The most important things to remember are:

- Every time Solve is called, your agent is given a single problem. By the end of Solve, it should return an answer as an integer. You don’t need to worry about how the problems are loaded from the files, how the problem sets are organized, or how the results are printed. You need only worry about writing the Solve method, which solves one question at a time.

- RavensProblems have a dictionary of RavensFigures, with each Figure representing one of the image squares in the problem and each key representing its letter (squares in the problem matrix) or number (answer choices). All RavensFigures have filenames so your agent can load the PNG with the visual representation.
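These two points translate into a small helper that separates the matrix frames (letter keys) from the answer choices (number keys) and collects each figure's PNG path. The sketch assumes the dictionary is exposed as problem.figures and that each RavensFigure stores its path in a visualFilename attribute. Confirm both names in RavensProblem.py and RavensFigure.py before relying on them. The helper itself is hypothetical.

```python
def split_figures(problem):
    """Return (matrix, answers): dicts of PNG paths keyed by frame label.

    Assumes problem.figures maps keys like "A", "B", "1", "2" to figure
    objects that carry a visualFilename attribute.
    """
    matrix, answers = {}, {}
    for key, figure in problem.figures.items():
        if key.isdigit():
            answers[int(key)] = figure.visualFilename  # answer choices 1, 2, ...
        else:
            matrix[key] = figure.visualFilename  # matrix frames A, B, ...
    # Sort so frames come out as A, B, C, ... and answers as 1, 2, 3, ...
    return dict(sorted(matrix.items())), dict(sorted(answers.items()))
```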

Libraries

The permitted libraries for this term’s project are:

- The Python image processing library Pillow (version 10.0.0). For installation instructions on Pillow, see this page.

- The NumPy library (1.25.2 at time of writing). For installation instructions on NumPy, see this page.

- OpenCV 4.6.0, installed as opencv-contrib-python-headless (4.6.0.66 at the time of writing). For installation instructions, see this page.

Additionally, we use Python 3.10.12 for our autograder.
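The autograder runs Python 3.10.12 with the versions listed above, so it can help to confirm that your local environment matches before you debug differences between local and Gradescope results. This sketch uses the standard library's importlib.metadata. The pip distribution names in EXPECTED are assumptions based on the package names in the list.

```python
import sys
from importlib.metadata import PackageNotFoundError, version

# Pinned versions from the list above, keyed by assumed pip distribution names.
EXPECTED = {
    "Pillow": "10.0.0",
    "numpy": "1.25.2",
    "opencv-contrib-python-headless": "4.6.0.66",
}


def check_environment(expected=EXPECTED):
    """Return {package: (installed_version_or_None, expected_version)}."""
    report = {}
    for package, wanted in expected.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            installed = None  # not installed under this distribution name
        report[package] = (installed, wanted)
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "(autograder uses 3.10.12)")
    for package, (installed, wanted) in check_environment().items():
        status = "OK" if installed == wanted else "MISMATCH"
        print(f"{package}: installed={installed} expected={wanted} [{status}]")
```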

Submitting Your Code

This class uses Gradescope, a server-side autograder, to evaluate your submission. This means you can see how your code is performing against the Test and Raven’s problems even without seeing the problems themselves. You will have access to separate areas to submit against the Milestone checks and to submit for the final project.

Submitting

To get started submitting your code, go to Canvas and click Gradescope on the left sidebar. Then, click CS 4635/7637 in the page that loads.

You will see five project options: Milestone 1, Milestone 2, Milestone 3, Milestone 4 and Final Project.

Milestone 1 will run your code only against the Basic B problems. Milestone 2 will run your code only against the Basic B, Test B, Challenge B, and Raven’s B problems. Milestone 3 will run your code against the Basic C, Test C, Challenge C, and Raven’s C problems. Milestone 4 will run your code against the Basic D+E, Test D+E, Challenge D+E, and Raven’s D+E problems.

To submit your code, drag and drop your project files (Agent.py and any support files you created; you should not submit the other files that we supplied) into the submission window. You may also zip your code up and upload the zip file. You’ll receive a confirmation message if your submission was successful; otherwise, take the corrective action specified in the error message and resubmit.
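If you prefer uploading a zip file, a short script can package Agent.py and your own support files and leave out the supplied framework files. This is a sketch under stated assumptions. It assumes the framework files are named after the classes described in this overview (RavensProject.py, ProblemSet.py, RavensProblem.py, RavensFigure.py, RavensGrader.py). It also assumes your support files sit next to Agent.py as top-level .py files, so it does not pick up subfolders or non-Python assets. build_submission is a hypothetical helper.

```python
import zipfile
from pathlib import Path

# Supplied framework files to exclude; these exact file names are an assumption.
FRAMEWORK_FILES = {
    "RavensProject.py", "ProblemSet.py", "RavensProblem.py",
    "RavensFigure.py", "RavensGrader.py",
}


def build_submission(project_dir, zip_path):
    """Zip Agent.py plus your own top-level .py files; return the names included."""
    project_dir = Path(project_dir)
    candidates = [
        path for path in sorted(project_dir.glob("*.py"))
        if path.name not in FRAMEWORK_FILES
    ]
    if not any(path.name == "Agent.py" for path in candidates):
        raise FileNotFoundError(f"Agent.py not found in {project_dir}")
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in candidates:
            # Store files at the archive root, mirroring a drag-and-drop upload.
            archive.write(path, arcname=path.name)
    return [path.name for path in candidates]
```

Running build_submission(".", "submission.zip") from your project folder would produce a zip you can upload in place of the individual files.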

Getting Your Results

Then, wait for the autograder to finish. If there are no errors, you will see a detailed summary of your results. Each row in the test result output is the result of a single problem from the problem set: you’ll see a Pass label if your agent answered the question correctly and a Fail label if it did not. Your overall score can be found at the top right of the screen and in the summary at the bottom of the test results.

If you’d like to resubmit your code, click “Resubmit” found at the bottom-right corner of the autograder results page. You can analyze and compare your submission results using the “Submission History” button on this page, too.

Selecting Your Results

Once you have made your last submission, click Submission History, and then click Activate next to your best submission. Gradescope counts your most recent submission by default and does not select your best score automatically, so activating your best submission is the only way to make sure that score is the one committed to Canvas. You must do this to receive points for that submission.

After the deadline, we will import your score to Canvas to use in calculating your final score on that milestone or the project.

Permitted Approaches

Generally speaking, you are allowed to pursue any approach that authentically attempts to answer the problems based solely on the content of the frames. You can mimic how a human would approach it, take a computer vision approach, or implement other mechanisms to reason over the problems.

You are not, however, permitted to use any approach that essentially involves probing the autograder for information about the correct answer solely for the purpose of identifying the same answer again on a resubmission. For example, you may not deterministically reshuffle the answer frames so that you know the correct answer is guaranteed to land in a certain spot, then hardcode that spot into your agent.

There are features in the autograder now to prevent these sorts of solutions from working, but there may still be ways around them. If you want to stress test such approaches against the autograder and let us know the results, you’re welcome to: just make sure your final submission uses a more authentic approach.

Relevant Resources

Goel, A. (2015). Geometry, Drawings, Visual Thinking, and Imagery: Towards a Visual Turing Test of Machine Intelligence. In Proceedings of the 29th Association for the Advancement of Artificial Intelligence Conference Workshop on Beyond the Turing Test. Austin, Texas.

McGreggor, K., & Goel, A. (2014). Confident Reasoning on Raven’s Progressive Matrices Tests. In Proceedings of the 28th Association for the Advancement of Artificial Intelligence Conference. Québec City, Québec.

Kunda, M. (2013). Visual problem solving in autism, psychometrics, and AI: the case of the Raven’s Progressive Matrices intelligence test. Doctoral dissertation.

Emruli, B., Gayler, R. W., & Sandin, F. (2013). Analogical mapping and inference with binary spatter codes and sparse distributed memory. In Neural Networks (IJCNN), The 2013 International Joint Conference on. IEEE.

Little, D., Lewandowsky, S., & Griffiths, T. (2012). A Bayesian model of rule induction in Raven’s progressive matrices. In Proceedings of the 34th Annual Conference of the Cognitive Science Society. Sapporo, Japan.

Kunda, M., McGreggor, K., & Goel, A. K. (2012). Reasoning on the Raven’s advanced progressive matrices test with iconic visual representations. In 34th Annual Conference of the Cognitive Science Society. Sapporo, Japan.

Lovett, A., & Forbus, K. (2012). Modeling multiple strategies for solving geometric analogy problems. In 34th Annual Conference of the Cognitive Science Society. Sapporo, Japan.

Schwering, A., Gust, H., Kühnberger, K. U., & Krumnack, U. (2009). Solving geometric proportional analogies with the analogy model HDTP. In 31st Annual Conference of the Cognitive Science Society. Amsterdam, Netherlands.

Joyner, D., Bedwell, D., Graham, C., Lemmon, W., Martinez, O., & Goel, A. (2015). Using Human Computation to Acquire Novel Methods for Addressing Visual Analogy Problems on Intelligence Tests. In Proceedings of the Sixth International Conference on Computational Creativity. Provo, Utah.

…and many more!

RPM Milestone 1

RPM Project: Milestone 1

First, make sure to read the full project overview. It contains instructions for the project as a whole and getting started with the code. This page describes only what you should do for Milestone 1 within the broader context of that project overview.

For Milestone 1, your goal is to simply demonstrate that you have set up the project infrastructure to run on your local computer and have made a submission to Gradescope. 50% of your grade on Milestone 1 is earned by meeting the minimum performance requirement; 50% of your grade is earned by completing the milestone journal.

Performance Requirement

For Milestone 1, your performance requirement is to answer one problem correctly in Gradescope. As long as you answer at least one problem correctly in a Milestone 1 Gradescope submission, you will earn the full performance credit for Milestone 1. Your answer can be hard-coded, randomly selected, etc.; the goal is simply to show that you can modify the Agent.py file to generate an answer to a problem and submit that to Gradescope.

While that is the only requirement, we highly recommend also familiarizing yourself with loading a problem from a file into Pillow and doing some initial pre-processing on it. Historically, many students have reflected that they had difficulty on the project because they started to explore using Pillow and image processing too late; if you take this opportunity to familiarize yourself with the images, you will be in a far better position to succeed going forward.
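As a starting point for that exploration, the sketch below loads one figure PNG with Pillow, flattens any transparency onto a white background, and converts the result to a NumPy boolean array that marks dark pixels. The threshold of 128 and the white-background compositing are assumptions to tune against the actual problem images, not course requirements. The helper names are hypothetical.

```python
import numpy as np
from PIL import Image


def load_binary(path, threshold=128):
    """Load a figure PNG and return a boolean array that is True on dark pixels."""
    with Image.open(path) as image:
        rgba = image.convert("RGBA")
    # Composite onto white so transparent regions count as background, not ink.
    background = Image.new("RGBA", rgba.size, (255, 255, 255, 255))
    gray = Image.alpha_composite(background, rgba).convert("L")
    pixels = np.asarray(gray)  # uint8 array, shape (height, width)
    return pixels < threshold


def dark_ratio(binary):
    """Fraction of the frame covered by dark pixels."""
    return float(binary.mean())
```

Applied to each frame's PNG path, dark_ratio gives a first numeric feature per frame that you can compare across the matrix.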

Submission Instructions

To fulfill the Performance Requirement, follow the directions on the full project overview for submitting to Gradescope, and then submit your agent to the Milestone 1 assignment.

After submitting to the Milestone 1 assignment in Gradescope, make sure to select which submission you want to have count for your graded submission. By default, Gradescope will use your latest submission, but you may want to use an earlier one. After the deadline, this will be exported to Canvas to calculate your final Milestone grade.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference or code you use (in accordance with the broader class policy on code reuse).

Milestone Journal

In addition to submitting an agent to Gradescope, you will also submit a brief milestone journal to the Milestone 1 assignment in Canvas. Your Milestone Journal must be written in JDF format. There is no maximum length; we expect most submissions to be around 5 pages, but you may write more if you would like. Writing the journal is intended to be a useful exercise for you first and foremost: it should let you externalize and formalize your ideas, it should let you get feedback from your classmates, and it should let your classmates learn from you. Your journal should not include actual code; it should just include your ideas.

For Milestone 1, you may not have done much actual development on your project. Instead, this is an opportunity to begin brainstorming how you will approach the project. You should answer the following questions:

- How would you, as a human, reason through the problems on the Raven’s test? Choose three or four problems and describe your human approach.

- How do you expect you will design an agent to approach these problems? Will you try to generate a verbal representation of the images, like identifying shapes and their relative positions to one another? Will you try to use some heuristic methods, like looking for patterns in the changing numbers of shapes, number of darkened pixels, etc.? Will you have your agent select from multiple strategies based on what it sees in a particular problem? You do not need to answer all these questions specifically, but these are examples of the types of questions you may answer in previewing how you expect to design your agent. Explain why your Agent’s approach will work in addressing some of the problems identified in the first bullet point.

- As it relates to the first and second bullet point, what do you anticipate your biggest challenges in designing your agent will be and why?

- Ensure your understanding of the Lecture and/or Ed lessons is reflected in the Journal by demonstrating application of the terminology used in Lectures and/or Ed lessons.

Submission Instructions

Complete your assignment using JDF format, then save your submission as a PDF. Assignments should be submitted via this Canvas page. You should submit a single PDF for this assignment. This PDF will be ported over to Peer Feedback for peer review by your classmates. If your assignment involves things (like videos, working prototypes, etc.) that cannot be provided in PDF, you should provide them separately (through OneDrive, Google Drive, Dropbox, etc.) and submit a PDF that links to or otherwise describes how to access that material.

After submitting, download your submission from Canvas to verify that you’ve uploaded the correct file. Review that any included figures are legible at standard magnification, with text or symbols inside figures at equal or greater size than figure captions.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference, and use quotes and in-line citations to mark any direct quotes.

Late work is not accepted without advance agreement via the extension request process except in cases of medical or family emergencies. In the case of such an emergency, please contact the Dean of Students.

Peer Review

After submission, your assignment will be ported to Peer Feedback for review by your classmates. Grading is not the primary function of this peer review process; the primary function is simply to give you the opportunity to read and comment on your classmates’ ideas, and receive additional feedback on your own. All grades will come from the graders alone. See the course participation policy for full details about how points are awarded for completing peer reviews.

RPM Milestone 2

RPM Project: Milestone 2

First, make sure to read the full project overview. It contains instructions for the project as a whole and getting started with the code. This page describes only what you should do for Milestone 2 within the broader context of that project overview.

For Milestone 2, your goal is to simply demonstrate that you have made some progress in creating an agent that can address the Set B problems of the Raven’s test, especially the Basic B and Test B problems. 50% of your grade on Milestone 2 is earned by meeting the minimum performance requirement; 50% of your grade is earned by completing the milestone journal.

Performance Requirement

For Milestone 2, you will earn 5% of your Milestone grade for each Basic B problem you get right up to a maximum of 25%. You will also earn 5% of your Milestone grade for each Test B problem you get right up to a maximum of 25%.

In other words: if your agent answers at least 5 Basic B and 5 Test B problems correctly, you earn full credit for the Performance Requirement of Milestone 2. Each problem short of that threshold in either category costs 5% of your Milestone grade.
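The same rule expressed as arithmetic, in a hypothetical helper. Milestone 3 applies the identical rule to the Basic C and Test C problems.

```python
def performance_credit(basic_correct, test_correct, per_problem=5, cap=25):
    """Performance credit as a percent of the Milestone grade.

    Each correct Basic or Test problem earns 5%, capped at 25% per category,
    so the performance half tops out at 50%.
    """
    return min(basic_correct * per_problem, cap) + min(test_correct * per_problem, cap)
```

For example, 7 Basic and 3 Test problems correct earns 25% + 15% = 40% of the 50% available, which matches a 5% deduction for each of the two missing Test problems.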

Submission Instructions

To fulfill the Performance Requirement, follow the directions on the full project overview for submitting to Gradescope, and then submit your agent to the Milestone 2 assignment.

After submitting to the Milestone 2 assignment in Gradescope, make sure to select which submission you want to have count for your graded submission. By default, Gradescope will use your latest submission, but you may want to use an earlier one. After the deadline, this will be exported to Canvas to calculate your final Milestone grade.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference or code you use (in accordance with the broader class policy on code reuse).

Milestone Journal

In addition to submitting an agent to Gradescope, you will also submit a brief milestone journal to the Milestone 2 assignment in Canvas. Your Milestone Journal must be written in JDF format. There is no maximum length; we expect most submissions to be around 5 pages, but you may write more if you would like. Writing the journal is intended to be a useful exercise for you first and foremost: it should let you externalize and formalize your ideas, it should let you get feedback from your classmates, and it should let your classmates learn from you. Your journal should not include actual code; it should just include a description of your agent’s approach.

Note that your Milestone 2 should be all original content created by you; if there is content that you wrote for a previous Milestone that is still pertinent, you may refer back to that content again (including quoting yourself), and then go on to discuss what has changed or what is new (or, why the same content you wrote previously is still so applicable).

For example, you might write:

In Milestone 1, I wrote that my agent works by “calculating a percentage change in the number of black pixels between each pair of frames, and checking for mathematical patterns in the changing ratio of black pixels. Then, I checked each answer option to see if it maintained the observed mathematical pattern.” For Set B, that continued to work, but I had to modify my code to include a check for exponential growth rather than just linear growth.

If you need to quote large portions of your prior writing, you can use a blockquote, or include your prior Milestone in an appendix that you refer to. The important element is that your TAs and classmates should be able to identify the new content easily.
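The example journal entry above describes a dark-pixel heuristic. Here is a minimal sketch of that idea for a 2x2 problem. It works on dark-pixel counts you have already computed for frames A, B, and C and for each answer option. It applies the A-to-B change to C in two ways, additively (linear) and as a constant percentage (multiplicative), and picks the option closest to either prediction. Only the A-to-B direction is used, the function names are hypothetical, and real problems often need more than a single pixel statistic.

```python
def predict_2x2(a, b, c):
    """Predict frame D's dark-pixel count from A:B :: C:D.

    Returns (additive, multiplicative): the same absolute change as A->B,
    and the same percentage change as A->B.
    """
    additive = c + (b - a)
    # Guard against an empty frame A, where a percentage change is undefined.
    multiplicative = c * (b / a) if a else additive
    return additive, multiplicative


def pick_answer(a, b, c, options):
    """Return the 1-based option whose count best fits either prediction."""
    predictions = predict_2x2(a, b, c)

    def distance(count):
        # Distance to whichever pattern this option fits best.
        return min(abs(count - p) for p in predictions)

    # min() keeps the first index on ties, so ties favor lower-numbered options.
    best_index = min(range(len(options)), key=lambda i: distance(options[i]))
    return best_index + 1  # answer choices are numbered from 1


print(pick_answer(100, 200, 150, [120, 260, 400, 500, 90, 150]))  # 2
```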

For Milestone 2, you should answer the following questions:

- How does your agent currently function? Depending on the inner workings of your agent, there may be a lot of different ways to describe this. For example, does it select from multiple problem-solving approaches depending on what it sees in the problem? Does it perform shape recognition or direct pixel comparison? Does it generate a candidate solution and compare it to the options, or does it take each potential answer and assess its likelihood to be the correct answer? You need not answer these specific questions, but they are examples of ways you might describe your agent’s design.

- How well does your agent currently perform? How many problems does it get right on the Set B problems?

- What problems does your agent perform well on? What problems (if any) does it struggle on? Why do you think it performs well on some but struggles on others (if any)?

- How efficient is your agent? Does it take a long time to run? (Give specific metrics) Does it slow down significantly on certain kinds of problems? If so, by how much? Why do you think your agent runs slower on certain problems?

- How do you plan to improve your agent’s performance on these problems before the final project submission?

- How do you plan to generalize your agent’s design to cover 3x3 problems in addition to the 2x2 problems?

- What feedback would you hope to get from classmates about how your agent could do better? What are some of the challenges you are facing (or think you will face later) that could benefit from someone else’s feedback?

- Ensure your understanding of the Lecture and/or Ed lessons is reflected in the Journal by demonstrating application of the terminology used in Lectures and/or Ed lessons.
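For the efficiency question, you can get specific metrics by timing each call to Solve. This sketch assumes each problem object has a name attribute and that you call it from your own local test script. How that script builds the list of problems (for example, mirroring what RavensProject does) is up to you. time_solves is a hypothetical helper, not part of the framework.

```python
import time


def time_solves(agent, problems):
    """Call agent.Solve on each problem and record wall-clock seconds.

    Returns a list of (problem_name, answer, seconds), slowest first.
    Assumes each problem has a name attribute.
    """
    timings = []
    for problem in problems:
        start = time.perf_counter()
        answer = agent.Solve(problem)
        elapsed = time.perf_counter() - start
        timings.append((problem.name, answer, elapsed))
    timings.sort(key=lambda row: row[2], reverse=True)
    return timings
```

Sorting slowest-first makes it easy to report which kinds of problems slow your agent down and by how much.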

Submission Instructions

Complete your assignment using JDF format, then save your submission as a PDF. Assignments should be submitted via this Canvas page. You should submit a single PDF for this assignment. This PDF will be ported over to Peer Feedback for peer review by your classmates. If your assignment involves things (like videos, working prototypes, etc.) that cannot be provided in PDF, you should provide them separately (through OneDrive, Google Drive, Dropbox, etc.) and submit a PDF that links to or otherwise describes how to access that material.

After submitting, download your submission from Canvas to verify that you’ve uploaded the correct file. Review that any included figures are legible at standard magnification, with text or symbols inside figures at equal or greater size than figure captions.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference, and use quotes and in-line citations to mark any direct quotes.

Late work is not accepted without advance agreement via the extension request process except in cases of medical or family emergencies. In the case of such an emergency, please contact the Dean of Students.

Peer Review

After submission, your assignment will be ported to Peer Feedback for review by your classmates. Grading is not the primary function of this peer review process; the primary function is simply to give you the opportunity to read and comment on your classmates’ ideas, and receive additional feedback on your own. All grades will come from the graders alone. See the course participation policy for full details about how points are awarded for completing peer reviews.

RPM Milestone 3

RPM Project: Milestone 3

First, make sure to read the full project overview. It contains instructions for the project as a whole and getting started with the code. This page describes only what you should do for Milestone 3 within the broader context of that project overview.

For Milestone 3, your goal is to demonstrate that you have generalized your approach out to cover the types of 3x3 problems present in Set C. 50% of your grade on Milestone 3 is earned by meeting the minimum performance requirement; 50% of your grade is earned by completing the milestone journal.

Performance Requirement

For Milestone 3, you will earn 5% of your Milestone grade for each Basic C problem you get right up to a maximum of 25%. You will also earn 5% of your Milestone grade for each Test C problem you get right up to a maximum of 25%.

In other words: if your agent answers at least 5 Basic C and 5 Test C problems correctly, you earn full credit for the Performance Requirement of Milestone 3. Each problem short of that threshold in either category costs 5% of your Milestone grade.

Submission Instructions

To fulfill the Performance Requirement, follow the directions on the full project overview for submitting to Gradescope, and then submit your agent to the Milestone 3 assignment.

After submitting to the Milestone 3 assignment in Gradescope, make sure to select which submission you want to have count for your graded submission. By default, Gradescope will use your latest submission, but you may want to use an earlier one. After the deadline, this will be exported to Canvas to calculate your final Milestone grade.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference or code you use (in accordance with the broader class policy on code reuse).

Milestone Journal

In addition to submitting an agent to Gradescope, you will also submit a brief milestone journal to the Milestone 3 assignment in Canvas. Your Milestone Journal must be written in JDF format. There is no maximum length; we expect most submissions to be around 5 pages, but you may write more if you would like. Writing the journal is intended to be a useful exercise for you first and foremost: it should let you externalize and formalize your ideas, it should let you get feedback from your classmates, and it should let your classmates learn from you. Your journal should not include actual code; it should just include a description of your agent’s approach.

Note that your Milestone 3 should be all original content created by you; if there is content that you wrote for a previous Milestone that is still pertinent, you may refer back to that content again (including quoting yourself), and then go on to discuss what has changed or what is new (or, why the same content you wrote previously is still so applicable).

For example, you might write:

In Milestone 1, I wrote that my agent works by “calculating a percentage change in the number of black pixels between each pair of frames, and checking for mathematical patterns in the changing ratio of black pixels. Then, I checked each answer option to see if it maintained the observed mathematical pattern.” For Set C, that continued to work, and in fact the patterns were easier to find because they were fit to 28 possible pairs instead of just 3.

If you need to quote large portions of your prior writing, you can use a blockquote, or include your prior Milestone in an appendix that you refer to. The important element is for TAs and classmates to be able to identify the new content.

For Milestone 3, you should answer the following questions:

- How does your agent currently function? Depending on the inner workings of your agent, there may be a lot of different ways to describe this. For example, does it select from multiple problem-solving approaches depending on what it sees in the problem? Does it perform shape recognition or direct pixel comparison? Does it generate a candidate solution and compare it to the options, or does it take each potential answer and assess its likelihood? You need not answer these specific questions, but they are examples of ways you might describe your agent’s design.

- How well does your agent currently perform? How many problems does it get right on the Set C problems?

- What problems does your agent perform well on? What problems (if any) does it struggle on? Why do you think it performs well on some but struggles on others (if any)?

- How efficient is your agent? Does it take a long time to run? (Give specific metrics) Does it slow down significantly on certain kinds of problems? If so, by how much? Why do you think your agent runs slower on certain problems?

- How do you plan to improve your agent’s performance on these problems before the final project submission?

- Looking ahead to Sets D and E, which problems do you think your agent will be able to solve at its present stage? Which problems will it struggle on?

- What feedback would you hope to get from classmates about how your agent could do better? What are some of the challenges you are facing (or think you will face later) that could benefit from someone else’s feedback?

- Ensure your understanding of the Lecture and/or Ed lessons is reflected in the Journal by demonstrating application of the terminology used in Lectures and/or Ed lessons.

Submission Instructions

Complete your assignment using JDF format, then save your submission as a PDF. Assignments should be submitted via this Canvas page. You should submit a single PDF for this assignment. This PDF will be ported over to Peer Feedback for peer review by your classmates. If your assignment involves things (like videos, working prototypes, etc.) that cannot be provided in PDF, you should provide them separately (through OneDrive, Google Drive, Dropbox, etc.) and submit a PDF that links to or otherwise describes how to access that material.

After submitting, download your submission from Canvas to verify that you’ve uploaded the correct file. Review that any included figures are legible at standard magnification, with text or symbols inside figures at equal or greater size than figure captions.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference, and use quotes and in-line citations to mark any direct quotes.

Late work is not accepted without advance agreement via the extension request process except in cases of medical or family emergencies. In the case of such an emergency, please contact the Dean of Students.

Peer Review

After submission, your assignment will be ported to Peer Feedback for review by your classmates. Grading is not the primary function of this peer review process; the primary function is simply to give you the opportunity to read and comment on your classmates’ ideas, and receive additional feedback on your own. All grades will come from the graders alone. See the course participation policy for full details about how points are awarded for completing peer reviews.

RPM Milestone 4

RPM Project: Milestone 4

First, make sure to read the full project overview. It contains instructions for the project as a whole and getting started with the code. This page describes only what you should do for Milestone 4 within the broader context of that project overview.

For Milestone 4, your goal is to demonstrate that you have addressed all the problems covered by the final project. This should leave you the last part of the term to improve your agent, borrow and experiment with ideas from your classmates, and write your final, more formal report. 50% of your grade on Milestone 4 is earned by meeting the minimum performance requirement; 50% of your grade is earned by completing the milestone journal.

Performance Requirement

For Milestone 4, you will earn 2.5% of your Milestone grade for each Basic D and Basic E problem you get right up to a maximum of 25%. You will also earn 2.5% of your Milestone grade for each Test D and Test E problem you get right up to a maximum of 25%.

In other words: if your agent answers at least 10 Basic D+E and 10 Test D+E problems correctly, you earn full credit for the Performance Requirement of Milestone 4. Each problem short of either threshold results in a deduction of 2.5% from your Milestone grade.
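The rule above can also be read as a deduction. The following is a minimal sketch of that reading, assuming the thresholds of 10 Basic D+E and 10 Test D+E problems and 2.5% per missing problem as stated in the text; the function name is hypothetical and is not the course's grading code.

```python
def milestone4_deduction_pct(basic_de_correct: int, test_de_correct: int) -> float:
    """Percent deducted from the Milestone 4 grade for the Performance Requirement.

    Hypothetical helper: full credit needs 10 correct Basic D+E and
    10 correct Test D+E problems; each problem short of either
    threshold costs 2.5%. Surplus on one side does not offset the other.
    """
    per_problem_pct = 2.5
    threshold = 10
    shortfall = (max(0, threshold - basic_de_correct)
                 + max(0, threshold - test_de_correct))
    return shortfall * per_problem_pct


print(milestone4_deduction_pct(10, 10))  # 0.0 (full credit)
print(milestone4_deduction_pct(8, 12))   # 5.0 (two Basic problems short)
```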

Submission Instructions

To fulfill the Performance Requirement, follow the directions on the full project overview for submitting to Gradescope, and then submit your agent to the Milestone 4 assignment.

After submitting to the Milestone 4 assignment in Gradescope, make sure to select which submission should count as your graded submission. By default, Gradescope will use your latest submission, but you may want to use an earlier one. After the deadline, this will be exported to Canvas to calculate your final Milestone grade.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference or code you use (in accordance with the broader class policy on code reuse).

Milestone Journal

In addition to submitting an agent to Gradescope, you will also submit a brief milestone journal to the Milestone 4 assignment in Canvas. Your Milestone Journal must be written in JDF format. There is no maximum length; we expect most submissions to be around 5 pages, but you may write more if you would like. Writing the journal is intended to be a useful exercise for you first and foremost: it should let you externalize and formalize your ideas, it should let you get feedback from your classmates, and it should let your classmates learn from you. Your journal should not include actual code; it should just include a description of your agent’s approach.

Note that your Milestone 4 should be all original content; if there is content that you wrote for a previous Milestone that is still pertinent, you may refer back to that content again (including quoting yourself), and then go on to discuss what has changed or what is new (or, why the same content you wrote previously is still so applicable).

For example, you might write:

In Milestone 1, I wrote that my agent works by “calculating a percentage change in the number of black pixels between each pair of frames, and checking for mathematical patterns in the changing ratio of black pixels. Then, I checked each answer option to see if it maintained the observed mathematical pattern.” Then, in Milestone 3, I wrote that “For Set C, that continued to work, and in fact the patterns were easier to find because they were fit to 28 possible pairs instead of just 3.” However, for Sets D and E, that approach struggled because fewer problems are solvable by sequences of pixel ratio changes. So, I had to add an additional heuristic method…

If you need to quote large portions of your prior writing, you can use a blockquote, or include your prior Milestone in an appendix that you refer to. The important element is for TAs and classmates to be able to identify the new content.

For Milestone 4, you should answer the following questions:

- How does your agent currently function? Depending on the inner workings of your agent, there may be a lot of different ways to describe this. For example, does it select from multiple problem-solving approaches depending on what it sees in the problem? Does it perform shape recognition or direct pixel comparison? Does it generate a candidate solution and compare it to the options, or does it take each potential answer and assess its likelihood? You need not answer these specific questions, but they are examples of ways you might describe your agent’s design.

- How well does your agent currently perform? How many problems does it get right on the Set D+E problems?

- What problems does your agent perform well on? What problems (if any) does it struggle on? Why do you think it performs well on some but struggles on others (if any)?

- How efficient is your agent? Does it take a long time to run? (Give specific metrics) Does it slow down significantly on certain kinds of problems? If so, by how much? Why do you think your agent runs slower on certain problems?

- How do you plan to improve your agent’s design and performance on these problems before the final project submission?

- What feedback would you hope to get from classmates about how your agent could do better? What are some of the challenges you are facing (or think you will face later) that could benefit from someone else’s feedback?

- Ensure your understanding of the Lecture and/or Ed lessons is reflected in the Journal by demonstrating application of the terminology used in Lectures and/or Ed lessons.

Submission Instructions

Complete your assignment using JDF format, then save your submission as a PDF. Assignments should be submitted via this Canvas page. You should submit a single PDF for this assignment. This PDF will be ported over to Peer Feedback for peer review by your classmates. If your assignment involves things (like videos, working prototypes, etc.) that cannot be provided in PDF, you should provide them separately (through OneDrive, Google Drive, Dropbox, etc.) and submit a PDF that links to or otherwise describes how to access that material.

After submitting, download your submission from Canvas to verify that you’ve uploaded the correct file. Review that any included figures are legible at standard magnification, with text or symbols inside figures at equal or greater size than figure captions.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference, and use quotes and in-line citations to mark any direct quotes.

Late work is not accepted without advance agreement via the extension request process except in cases of medical or family emergencies. In the case of such an emergency, please contact the Dean of Students.

Peer Review

After submission, your assignment will be ported to Peer Feedback for review by your classmates. Grading is not the primary function of this peer review process; the primary function is simply to give you the opportunity to read and comment on your classmates’ ideas, and receive additional feedback on your own. All grades will come from the graders alone. See the course participation policy for full details about how points are awarded for completing peer reviews.

Final RPM Project

RPM Project: Final Project

First, make sure to read the full project overview. It contains instructions for the project as a whole and getting started with the code. This page describes only what you should do for the Final Project within the broader context of that project overview.

For the final project submission, you will do the same thing you have done on the milestones, but you will be graded on your agent’s final performance on all Basic and Test problems. You will also write a more formal report on your final agent design. 50% of your project grade is earned based on your agent’s performance; 50% of your grade is earned by completing the final project report.

Performance Score

For the final project, you will receive 1 point for each Basic and Test problem your agent correctly solves across all 96 Basic and Test problems. Your maximum score is therefore 96/96, which would earn full credit for your performance score. Answering 77 out of 96, for instance, would earn you roughly an 80% performance score, which would be about 40 points (out of 100) toward your full project grade.
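As a quick check of the example above, here is a minimal sketch of the performance-score arithmetic. It assumes, as stated in the text, 96 total problems and that the performance score is worth 50 of the 100 project points; the function name is hypothetical, and the exact rounding the course applies is not specified here.

```python
def final_performance(correct: int, total: int = 96,
                      project_weight: float = 50.0) -> tuple[float, float]:
    """Return (performance percent, points out of 100 for the full project).

    Hypothetical helper: one point per correct problem, scaled so that
    a perfect score is worth project_weight points.
    """
    if not 0 <= correct <= total:
        raise ValueError("correct must be between 0 and total")
    fraction = correct / total
    return fraction * 100, fraction * project_weight


pct, pts = final_performance(77)
print(f"{pct:.1f}% -> {pts:.1f} points")  # 80.2% -> 40.1 points
```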

Submission Instructions

To earn your performance score, follow the directions on the full project overview for submitting to Gradescope, and then submit your agent to the Final Project assignment.

After submitting to the Final Project assignment in Gradescope, make sure to select which submission should count as your graded submission. By default, Gradescope will use your latest submission, but you may want to use an earlier one. After the deadline, this will be exported to Canvas to calculate your final project grade.

This is an individual assignment. All work you submit should be your own. Make sure to cite any sources you reference or code you use (in accordance with the broader class policy on code reuse).

Final Project Report

In addition to submitting an agent to Gradescope, you will also submit a Final Project Report to the Final Project assignment in Canvas. Your Final Project Report must be written in JDF format. It may be up to 10 pages. If you need to include more than 10 pages of content, you may include them in the appendices, but note that content in appendices will not be used for grading; appendices are mainly useful if you want to include long sequences of images or diagrams that would otherwise quickly exceed the page limit.